[NNC] Read/Write Dependency analysis (#46952)

Summary:

Adds a new piece of infrastructure to the NNC fused-kernel generation compiler, which builds a dependency graph of the reads and writes to memory regions in a kernel.

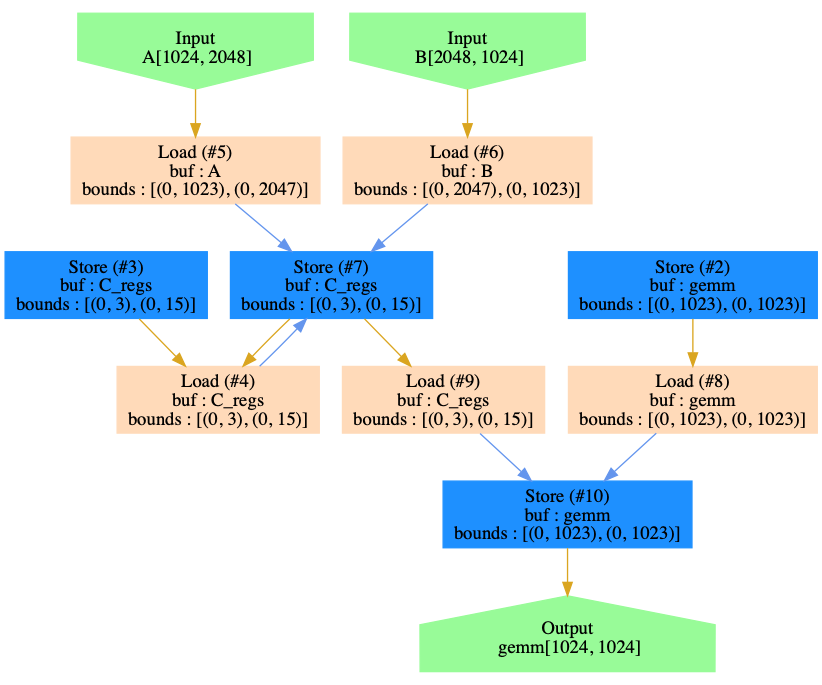

It can be used to generate graphs like this from the GEMM benchmark (not this only represents memory hierarchy not compute hierarchy):

Or to answer questions like this:

```
Tensor* c = Compute(...);
Tensor* d = Compute(...);
LoopNest loop({d});
MemDependencyChecker analyzer;
loop.root_stmt()->accept(&analyzer);
if (analyzer.dependsDirectly(loop.getLoopStmtsFor(d)[0], loop.getLoopStmtsFor(c)[0])) {
  // do something, maybe computeInline
}
```

Or this:

```
Tensor* d = Compute(...);
LoopNest loop({d});
MemDependencyChecker analyzer(loop.getInputs(), loop.getOutputs());
// run the analysis before querying it
loop.root_stmt()->accept(&analyzer);
const Buf* output = d->buf();
for (const Buf* input : loop.getInputs()) {
  if (!analyzer.dependsIndirectly(output, input)) {
    // signal that this input is unused
  }
}
```

This is a monster of a diff, and I apologize. I've tested it as well as possible for now, but it's not hooked up to anything yet, so it should not affect any current usages of the NNC fuser.

**How it works:**

Similar to the registerizer, the MemDependencyChecker walks the IR, aggregating memory accesses into scopes and then merging each scope into its parent scope. Along the way it tracks which write was the last to touch a particular region of memory, and adds dependency links wherever that region is later used.
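As a rough illustration of the last-write tracking idea only, here is a minimal sketch over a straight-line sequence of accesses to one buffer. It ignores scopes, loops and symbolic bounds entirely, and the names `Access` and `buildDependencies` are hypothetical, not part of the NNC API.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <vector>

// Hypothetical record of one memory access, in program order.
struct Access {
  int id;       // position in program order
  bool isWrite; // true for a Store, false for a Load
  int start;    // first element touched (inclusive)
  int end;      // last element touched (inclusive)
};

// Returns, for each access id, the ids of the writes it depends on.
std::map<int, std::set<int>> buildDependencies(
    const std::vector<Access>& accesses) {
  std::map<int, int> lastWriter; // element index -> id of the last write
  std::map<int, std::set<int>> deps;
  for (const Access& a : accesses) {
    assert(a.start <= a.end);
    deps[a.id]; // make sure every access has an entry, even if empty
    for (int e = a.start; e <= a.end; ++e) {
      if (a.isWrite) {
        // A write becomes the last writer of every element it covers.
        lastWriter[e] = a.id;
      } else {
        // A read depends on whichever write last touched each element.
        auto it = lastWriter.find(e);
        if (it != lastWriter.end()) {
          deps[a.id].insert(it->second);
        }
      }
    }
  }
  return deps;
}
```

In this sketch a read that straddles two earlier writes picks up a dependency on both, while a write that is fully overwritten before any read picks up no dependents.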

The analysis relies on a bunch of math on symbolic contiguous regions, which I've pulled out into its own file (bounds_overlap.h/cpp). Sometimes it won't be able to infer dependence with 100% accuracy, but I think it should always be conservative: it may occasionally add false positives, but I'm aware of no false negatives.
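To give a feel for the kind of overlap classification involved, here is a minimal sketch using concrete integer bounds. The names `Range`, `Overlap` and `classifyOverlap` are hypothetical and are not the interface in bounds_overlap.h, which operates on symbolic expressions rather than plain integers.

```cpp
#include <cassert>

// Hypothetical closed interval [start, end] over concrete integers.
struct Range {
  int start;
  int end;
};

enum class Overlap { None, Partial, AContainsB, BContainsA };

// Classifies how range a overlaps range b.
// Equal ranges are reported as AContainsB.
Overlap classifyOverlap(const Range& a, const Range& b) {
  assert(a.start <= a.end && b.start <= b.end);
  if (a.end < b.start || b.end < a.start) {
    return Overlap::None; // completely disjoint
  }
  if (a.start <= b.start && a.end >= b.end) {
    return Overlap::AContainsB;
  }
  if (b.start <= a.start && b.end >= a.end) {
    return Overlap::BContainsA;
  }
  return Overlap::Partial;
}
```

With symbolic bounds some of these comparisons cannot be resolved; the conservative behavior described above would correspond to reporting an overlap in those cases rather than `None`.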

The hardest part of the analysis is determining when a Load inside a For loop depends on a Store that is lower in the IR, from a previous iteration of the loop. This depends on a whole bunch of factors, including whether or not we should consider loop iteration order. The analyzer has a configuration option for this. For example, this loop:

```
for (int x = 0; x < 10; ++x) {
  A[x] = B[x] + 1;
}
```

has no inter-loop dependence, since each iteration uses a distinct slice of both A and B. But this one:

```
for (int x = 0; x < 10; ++x) {
  A[0] = A[0] + B[x];
}
```

has a self-loop dependence between the Load and the Store of A. This applies to many cases that are not reductions as well. In this example:

```
for (int x = 0; x < 10; ++x) {
  A[x] = A[x+1] + x;
}
```

Whether or not it has a self-loop dependence depends on whether we assume the execution order is fixed (as opposed to allowing the loop to be parallelized later). If the read from `A[x+1]` always comes before the write to that same region, then it has no dependence.
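To make the order sensitivity concrete, here is a small sketch in plain C++ (not NNC IR) that executes the same loop body in ascending and in descending order. `runShift` is a hypothetical helper written only for this illustration.

```cpp
#include <cassert>
#include <vector>

// Runs A[x] = A[x+1] + x for x in [0, n), visiting x in ascending or
// descending order, and returns the resulting buffer.
std::vector<int> runShift(std::vector<int> A, int n, bool ascending) {
  assert(n >= 0 && static_cast<int>(A.size()) > n); // A[x+1] stays in bounds
  for (int k = 0; k < n; ++k) {
    int x = ascending ? k : n - 1 - k;
    A[x] = A[x + 1] + x;
  }
  return A;
}
```

Starting from `{10, 20, 30, 40}` with `n = 3`, ascending order gives `{20, 31, 42, 40}`: each `A[x+1]` is read before it is overwritten. Descending order gives `{43, 43, 42, 40}`, because each iteration reads the value written by the previous one. The two orders disagree, which is why the answer depends on whether iteration order may be assumed.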

The analyzer can correctly handle dynamic shapes, but we may need more test coverage of real-world usages of dynamic shapes. I unit test some simple and pathological cases, but coverage could be better.

**Next Steps:**

Since the PR was already so big I didn't actually hook it up anywhere, but I had planned on rewriting bounds inference based on the dependency graph. Will do that next.

There are a few gaps in this code which could be filled in later if we need them:

* Upgrading the bound math to work with write strides, which will reduce false positive dependencies.

* Better handling of Conditions, reducing false positive dependencies when a range is written in both branches of a Cond.

* Support for the AtomicAdd node added in the CUDA codegen.
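As an illustration of the first gap, here is a sketch of why comparing only contiguous bounds can report a dependency that stride-aware math would rule out. `StridedAccess`, `boundsOverlap` and `elementsOverlap` are hypothetical names, and the exact check below simply enumerates elements, which is only sensible for small concrete examples.

```cpp
#include <cassert>

// Hypothetical strided access: touches start, start + stride, ...
// for count elements in total.
struct StridedAccess {
  int start;
  int stride;
  int count;
};

// Conservative check: compares only the bounding ranges [first, last].
bool boundsOverlap(const StridedAccess& a, const StridedAccess& b) {
  assert(a.stride > 0 && b.stride > 0 && a.count > 0 && b.count > 0);
  int aLast = a.start + a.stride * (a.count - 1);
  int bLast = b.start + b.stride * (b.count - 1);
  return a.start <= bLast && b.start <= aLast;
}

// Exact check: true only if some element is touched by both accesses.
bool elementsOverlap(const StridedAccess& a, const StridedAccess& b) {
  assert(a.stride > 0 && b.stride > 0 && a.count > 0 && b.count > 0);
  for (int i = 0; i < a.count; ++i) {
    for (int j = 0; j < b.count; ++j) {
      if (a.start + a.stride * i == b.start + b.stride * j) {
        return true;
      }
    }
  }
  return false;
}
```

A write to the even elements 0..8 and a read of the odd elements 1..9 have overlapping bounds but share no element, so the bounds-only check yields a false positive here.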

**Testing:**

See the new unit tests; I've tried to be verbose about what is being tested. I ran the Python tests, but there shouldn't be any way for this work to affect them yet.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/46952

Reviewed By: ejguan

Differential Revision: D24730346

Pulled By: nickgg

fbshipit-source-id: 654c67c71e9880495afd3ae0efc142e95d5190df