Fix LeakyReLU image (#78508)

Fixes #56363, Fixes #78243

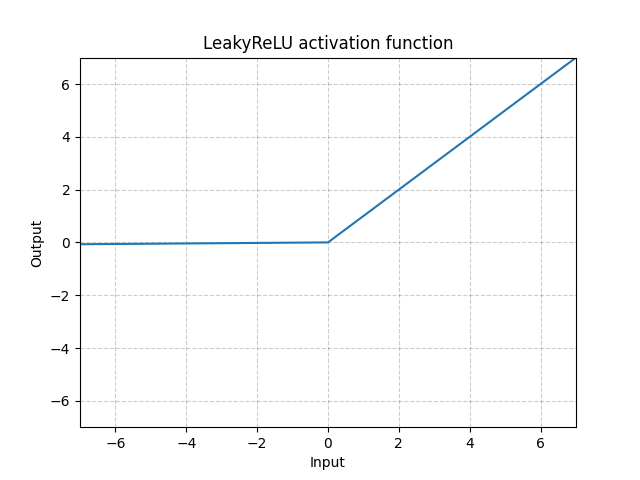

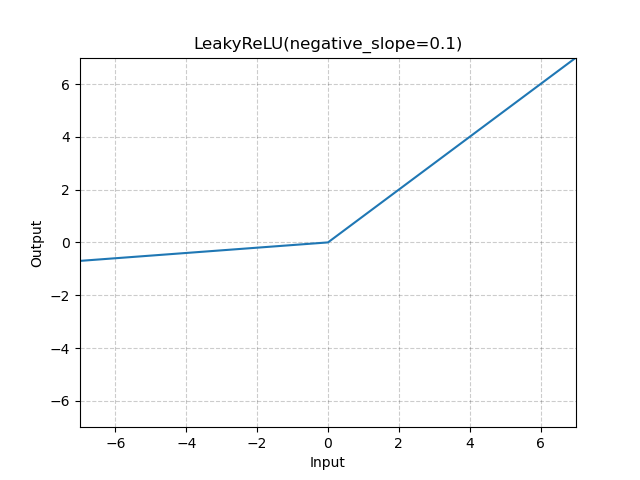

| [Before](https://pytorch.org/docs/stable/generated/torch.nn.LeakyReLU.html) | [After](https://docs-preview.pytorch.org/78508/generated/torch.nn.LeakyReLU.html) |

| --- | --- |

|  |  |

- Plotted `LeakyReLU` with `negative_slope=0.1` instead of `negative_slope=0.01`

- Changed the title from `"{function_name} activation function"` to the name returned by `_get_name()` (with parameter info). The full list is attached at the end.

- Modernized the script and ran black on `docs/source/scripts/build_activation_images.py`. Apologies for the ugly diff.

```
ELU(alpha=1.0)
Hardshrink(0.5)
Hardtanh(min_val=-1.0, max_val=1.0)
Hardsigmoid()
Hardswish()
LeakyReLU(negative_slope=0.1)
LogSigmoid()
PReLU(num_parameters=1)
ReLU()
ReLU6()
RReLU(lower=0.125, upper=0.3333333333333333)
SELU()
SiLU()
Mish()
CELU(alpha=1.0)
GELU(approximate=none)
Sigmoid()
Softplus(beta=1, threshold=20)
Softshrink(0.5)
Softsign()
Tanh()
Tanhshrink()
```
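For reviewers wondering why the slope used in the `LeakyReLU` plot matters, here is a minimal pure-Python sketch of the function's definition. It is not taken from `build_activation_images.py`: `leaky_relu` is a hypothetical helper and the sample points are illustrative. It only shows that the negative branch is ten times steeper with `negative_slope=0.1`, which presumably makes the leak easier to see in a plot.

```python
def leaky_relu(x: float, negative_slope: float = 0.01) -> float:
    """LeakyReLU(x) = max(0, x) + negative_slope * min(0, x)."""
    return max(0.0, x) + negative_slope * min(0.0, x)


# Illustrative sample points; the range used by the docs script is not assumed here.
xs = [-4.0, -2.0, 0.0, 2.0]

for x in xs:
    old = leaky_relu(x, negative_slope=0.01)  # slope used in the old image
    new = leaky_relu(x, negative_slope=0.1)   # slope used in the new image
    print(f"x={x:+.1f}  slope=0.01: {old:+.2f}  slope=0.1: {new:+.2f}")
```

On the positive side both curves are identical, so only the negative half of the plot changes.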

cc @brianjo @mruberry @svekars @holly1238

Pull Request resolved: https://github.com/pytorch/pytorch/pull/78508

Approved by: https://github.com/jbschlosser