Upgrade oneDNN to v2.7 (#87061)

This PR upgrades oneDNN to v2.7.

### oneDNN v2.7 changes:

**Performance Optimizations**

- Improved performance for future Intel Xeon Scalable processors (code name Sapphire Rapids).

- Introduced performance optimizations for [bf16 floating point math mode](http://oneapi-src.github.io/oneDNN/group_dnnl_api_mathmode.html) on Intel Xeon Scalable processors (code name Sapphire Rapids). The bf16 math mode allows oneDNN to use bf16 arithmetic and Intel AMX instructions in computations on fp32 data.
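For intuition about what the bf16 math mode trades away, here is a minimal plain-Python sketch. It is not oneDNN's implementation. It assumes the usual bf16 definition (the upper 16 bits of an fp32 value, rounded to nearest-even) and fp32 accumulation of the products, which is roughly how bf16 dot products on AMX behave. The helper names `to_bf16` and `dot_bf16_math` are hypothetical and exist only for this illustration. The mode itself is configured through oneDNN's math-mode API linked above.

```python
import struct


def fp32_bits(x: float) -> int:
    """Return the 32-bit IEEE-754 bit pattern of x rounded to fp32."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_fp32(bits: int) -> float:
    """Interpret a 32-bit pattern as an fp32 value."""
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFFFFFF))[0]


def to_bf16(x: float) -> float:
    """Hypothetical helper: round an fp32 value to bf16 (round-to-nearest-even).

    bf16 keeps the sign, the 8 exponent bits and the top 7 mantissa bits of
    fp32, i.e. the upper 16 bits. NaN handling is omitted for brevity.
    """
    bits = fp32_bits(x)
    lsb = (bits >> 16) & 1        # lowest kept bit, used to break ties to even
    bits += 0x7FFF + lsb          # rounding bias for the 16 discarded bits
    return bits_to_fp32(bits & 0xFFFF0000)


def dot_bf16_math(a, b):
    """Hypothetical helper: dot product of fp32 vectors using bf16 inputs.

    Each input is rounded to bf16; products and the running sum stay in fp32.
    """
    acc = 0.0
    for x, y in zip(a, b):
        acc = bits_to_fp32(fp32_bits(acc + to_bf16(x) * to_bf16(y)))
    return acc


if __name__ == "__main__":
    a = [1.0 + 2**-10, 0.5]
    b = [1.0, 2.0]
    print(sum(x * y for x, y in zip(a, b)))  # prints 2.0009765625 (exact)
    print(dot_bf16_math(a, b))               # prints 2.0 (2**-10 lost in bf16)
```

The small term `2**-10` falls below bf16's 7-bit mantissa, so it disappears once the input is rounded. This is the kind of precision the bf16 math mode gives up in exchange for faster bf16/AMX computation on fp32 data.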

See https://github.com/oneapi-src/oneDNN/releases/tag/v2.7 for the full list of changes.

### oneDNN v2.6.1 & v2.6.2 changes:

**Functionality**

- Updated the ITT API to 3.22.5.

- Fixed a correctness issue in the fp32 convolution implementation for cases with large spatial sizes (https://github.com/pytorch/pytorch/issues/84488).

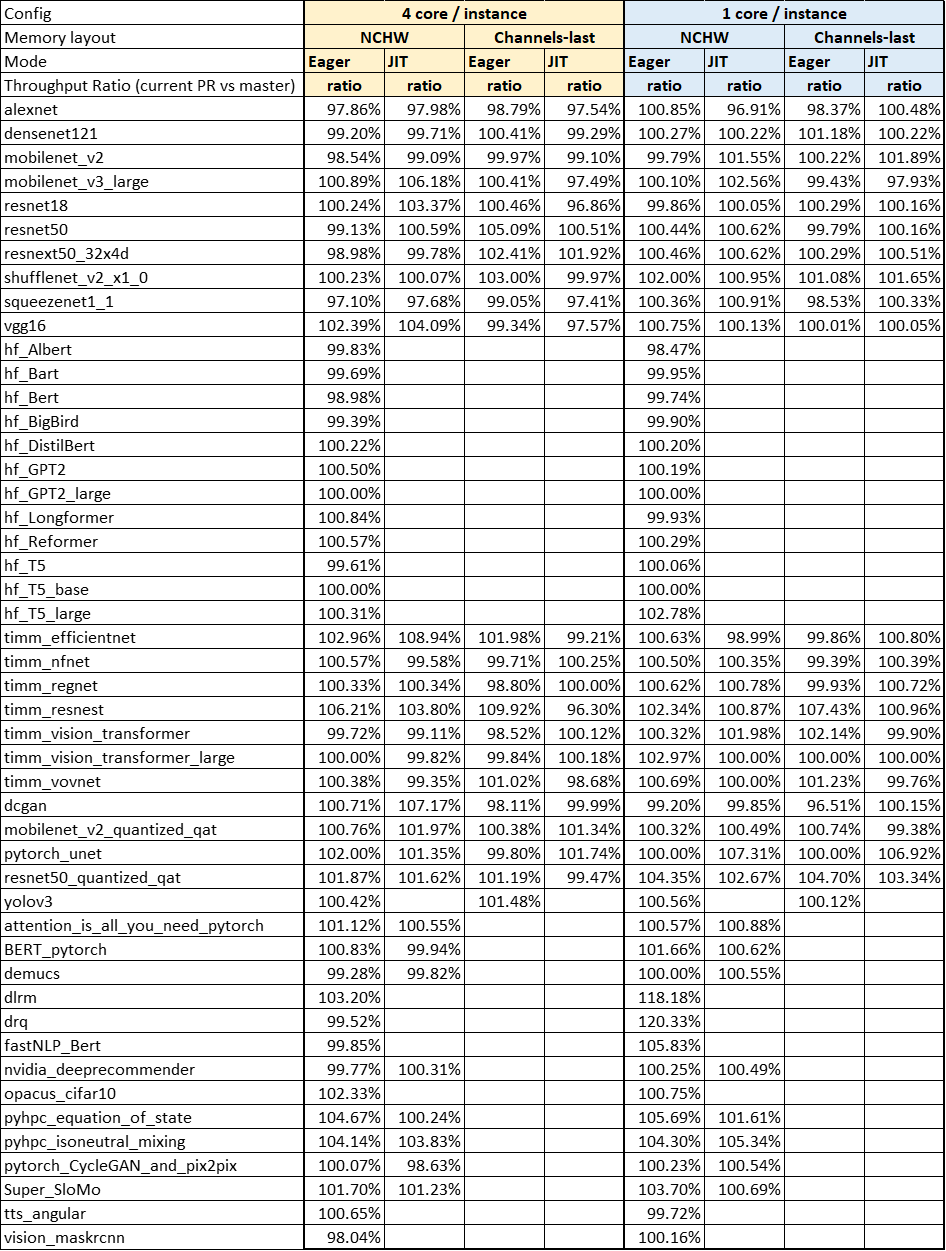

### Performance Benchmark

Benchmarked with TorchBench on an Intel Xeon Ice Lake (ICX) machine with 40 cores.

Intel OpenMP and tcmalloc were preloaded.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/87061

Approved by: https://github.com/jgong5, https://github.com/XiaobingSuper, https://github.com/weiwangmeta